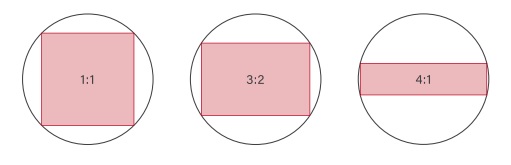

Spatial resolution is determined by the number of pixels in a CMOS or CCD sensor array. While generally speaking “more is better”, what really matters is slightly more complex than that. One needs to know the dimensions and characteristics of the real-world scene at which the camera is directed, as well as the size of the smallest feature(s) to be detected.

The sensor-coverage fit of a lens is also relevant, as is the optical quality of the lens. Lighting affects image quality as well.

But independent of lens and lighting, a key guideline is that each minimal real-world feature to be detected should appear in a 3×3 pixel grid in the image. So if the real-world scene is X by Y meters, and the smallest feature to be detected is A by B centimeters, assuming the lens is matched to the sensor and the scene, it’s just a math problem to determine the number of pixels required on the sensor.
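As a rough illustration of that math problem, here is a minimal Python sketch. The function name `required_pixels`, its parameters, and the example scene and feature sizes are hypothetical and chosen only for illustration. It assumes, as above, that the lens is matched to the sensor and the scene, so that the scene fills the sensor exactly.

```python
import math

def required_pixels(scene_w_m, scene_h_m, feat_w_cm, feat_h_cm, px_per_feature=3):
    """Return (horizontal, vertical) pixel counts so that the smallest
    feature spans px_per_feature pixels in each direction."""
    def along_axis(scene_m, feat_cm):
        scene_cm = scene_m * 100.0            # convert meters to centimeters
        features_across = scene_cm / feat_cm  # how many smallest features fit across
        # round() guards against floating-point noise before rounding up
        return math.ceil(round(features_across * px_per_feature, 6))
    return along_axis(scene_w_m, feat_w_cm), along_axis(scene_h_m, feat_h_cm)

# Illustrative numbers only: a 2 m x 1 m scene with a 0.5 cm x 0.5 cm smallest feature
px_w, px_h = required_pixels(2.0, 1.0, 0.5, 0.5)
print(px_w, px_h, f"{px_w * px_h / 1e6:.2f} MP")  # prints: 1200 600 0.72 MP
```

In practice one would then pick the next larger standard sensor resolution, since real sensors come in fixed sizes and the scene rarely fills the frame perfectly.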

There is a comprehensive treatment of how to calculate resolution in this short article, including a link to a resolution calculator. Understanding these concepts will help you design an imaging system that has enough capacity to solve your application without over-engineering the solution. Enough is enough.

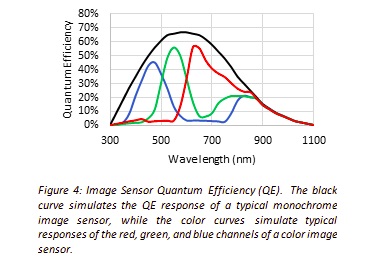

Finally, the above guideline is for monochrome imaging, which, to the surprise of newcomers to machine vision, is often better than color for effective and cost-efficient outcomes. Certainly, some applications depend on color. The guideline for color imaging is that the minimal feature should occupy a 6×6 pixel grid.
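To make the cost of color concrete, the following Python sketch compares the pixel counts implied by the 3×3 monochrome guideline and the 6×6 color guideline for the same scene. The names `GRID_SIDE` and `pixels_needed` and the scene and feature sizes are hypothetical, and the sketch assumes the scene fills the sensor exactly.

```python
import math

# Pixels per smallest feature along each axis, per the guidelines above
GRID_SIDE = {"monochrome": 3, "color": 6}

def pixels_needed(scene_m, feature_cm, mode):
    """scene_m and feature_cm are (width, height) tuples; returns (px_w, px_h)."""
    side = GRID_SIDE[mode]
    return tuple(
        # meters -> centimeters, features across, times pixels per feature
        math.ceil(round(s * 100.0 / f * side, 6))
        for s, f in zip(scene_m, feature_cm)
    )

# Illustrative numbers only: a 2 m x 1 m scene with a 0.5 cm x 0.5 cm smallest feature
scene, feature = (2.0, 1.0), (0.5, 0.5)
mono = pixels_needed(scene, feature, "monochrome")
color = pixels_needed(scene, feature, "color")
ratio = (color[0] * color[1]) / (mono[0] * mono[1])
print(mono, color, ratio)  # prints: (1200, 600) (2400, 1200) 4.0
```

Doubling the grid side in both directions quadruples the total pixel count, which is one reason monochrome is often the more cost-efficient choice when color is not required.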

If you’d like someone to double-check your calculations, or to prepare the calculations for you and recommend a sensor, camera, optics, and/or software, the sales engineers at 1stVision have the expertise to support you. Give us a brief idea of your application and we will contact you to discuss camera options.

1stVision’s sales engineers have an average of 20 years of experience to assist in your camera selection. Representing the largest portfolio of industry-leading brands in imaging components, we can help you design the optimal vision solution for your application.

1stVision is the most experienced distributor in the U.S. of machine vision cameras, lenses, frame grabbers, cables, lighting, and software.