Aside from line-scan imaging, which is compelling for some applications, machine vision has been dominated by frame-based approaches. (Compare Area-scan vs. Line-scan.) With an area-scan camera, the entire two-dimensional sensor array of x pixels by y pixels is read out and transmitted over the digital interface to the host PC. Whether the interface is USB3, GigE, CoaXPress, Camera Link, or something else, that’s a lot of image data to transport.

If your application is about motion, why transmit the static pixels?

The question above is intentionally provocative, of course. One might ask, “Do I have a choice?” With conventional sensors, one really doesn’t: their pixels simply convert light to electrons according to the physics of CMOS, and the readout circuits pass the whole array of values over the interface to the host PC for algorithmic interpretation. There’s nothing wrong with that! Thousands of effective machine vision applications use precisely that frame-based paradigm, or the line-scan approach, arguably a close cousin of the area-scan model.

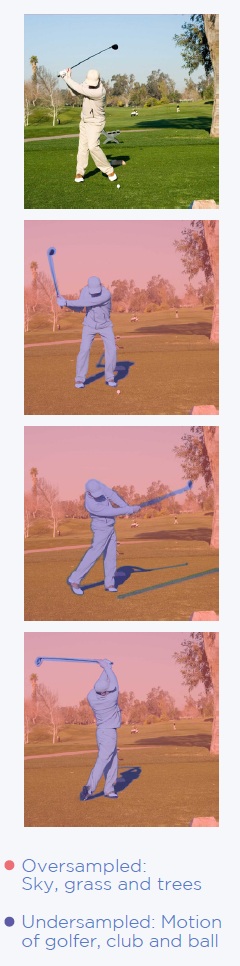

Consider the four-frame sequence above in the context of a golf-swing analysis application. As the legend indicates, the golfer, club, and ball (tinted blue in post-processing) are undersampled: the phases of the swing that fall between frames are never captured.

Meanwhile the non-moving tree, grass, and sky are needlessly re-sampled in each frame.

It takes an expensive high-frame-rate sensor and interface to significantly increase the sample rate. It also takes storage capacity for each frame and, for automated applications, processing capacity to separate the moving segments from the static ones.
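To put rough numbers on that cost, here is a back-of-the-envelope sketch of the raw data rate of a frame-based stream. The 1280 x 720, 8-bit monochrome sensor is a hypothetical example chosen for illustration, not a specific camera, and the calculation ignores interface overhead and compression.

```python
def frame_data_rate_mb_per_s(width, height, bits_per_pixel, fps):
    """Raw (uncompressed) data rate of a frame-based stream, in MB/s."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return bytes_per_frame * fps / 1e6


# Hypothetical sensor: 1280 x 720 pixels, 8-bit mono (an assumption).
for fps in (60, 1_000, 10_000):
    rate = frame_data_rate_mb_per_s(1280, 720, 8, fps)
    print(f"{fps:>6} fps -> {rate:,.1f} MB/s")
```

Every pixel is transmitted in every frame, so the data rate scales linearly with frame rate whether or not anything in the scene has moved.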

With event-based sensing, introduced below, one can achieve the equivalent of 10k fps – by just transmitting the pixels whose values change.

Images courtesy Prophesee Metavision.

Event-based sensing only transmits the pixels that changed

Unlike photography for social media or commercial advertising, where real-looking images are usually the goal, for machine vision it’s all about effective (automated) applications. In motion-oriented applications, we’re just trying to automatically control the robot arm, drive the car, monitor the secure perimeter, track the intruder(s), monitor the vibration, …

We’re NOT worried about color rendering, pretty images, or the static portions in the field of view (FOV). With event-based sensing, “high temporal imaging” is possible, since one need only pay attention to the pixels whose values change.
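The idea of transmitting only changed pixels can be emulated in software. The sketch below is a simplified frame-differencing simulation, not how an event-based sensor works internally (a real sensor detects change at each pixel independently rather than comparing whole frames). The `Event` type, the `events_between` function, and the 0.2 log-intensity threshold are all assumptions made for illustration.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    x: int
    y: int
    t_us: int      # timestamp in microseconds
    polarity: int  # +1 = got brighter, -1 = got darker


def events_between(prev, curr, t_us, threshold=0.2) -> List[Event]:
    """Emit an event for each pixel whose log intensity changed by at
    least `threshold` between two frames; unchanged pixels emit nothing."""
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            # +1.0 avoids log(0) for fully dark pixels.
            delta = math.log(c + 1.0) - math.log(p + 1.0)
            if abs(delta) >= threshold:
                events.append(Event(x, y, t_us, 1 if delta > 0 else -1))
    return events


# Tiny 3 x 2 scene: one pixel brightens, one darkens, four stay static.
prev = [[100, 100, 100], [100, 100, 100]]
curr = [[100, 180, 100], [100, 100, 40]]
events = events_between(prev, curr, t_us=100)
print(events)
```

Of the six pixels in this toy scene, only the two that changed produce output; the four static pixels cost nothing to transmit.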

Consider the short video below. The left side shows a succession of frame-based images of a machine driven by an electric motor and belt. That sequence, however, is not a helpful basis for monitoring vibration with an eye to scheduling (or skipping) maintenance or anticipating breakdowns.

The right-hand sequence was obtained with an event-based vision sensor (EVS), and it clearly reveals which components show “medium” and “significant” vibration. Here those thresholds have triggered color-mapped pseudo-images to aid comprehension. An automated application could instead map the coordinates to an action, such as gracefully shutting down the machine or scheduling maintenance according to calculated risk.
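The source does not say how the “medium” and “significant” levels were computed. One plausible simplification, sketched below, is to count events per pixel over a time window and compare the counts against two thresholds. The function name `classify_activity` and the threshold values are hypothetical.

```python
from collections import Counter


def classify_activity(event_coords, medium=3, significant=6):
    """Label pixels by how many events they produced in a time window.

    event_coords: iterable of (x, y) tuples, one per event.
    Pixels below the `medium` threshold are left unlabeled.
    """
    counts = Counter(event_coords)
    labels = {}
    for xy, n in counts.items():
        if n >= significant:
            labels[xy] = "significant"
        elif n >= medium:
            labels[xy] = "medium"
    return labels


# Toy window: pixel (5, 2) fires 7 times, (1, 1) fires 4 times, (0, 0) once.
coords = [(5, 2)] * 7 + [(1, 1)] * 4 + [(0, 0)]
labels = classify_activity(coords)
print(labels)
```

An application could render the labels as a color-mapped overlay, or feed the labeled coordinates to logic that decides whether to shut the machine down or schedule maintenance.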

Another example to help make it real:

Here’s another short video, which brings to mind applications like autonomous vehicles and security. It’s not meant to be pretty – it’s meant to show the sensor detects and transmits just the pixels that correlate to change:

Event-based sensing – it really is a different paradigm

Even (especially?) if you are seasoned at line-scan or area-scan imaging, understanding event-based sensing takes a paradigm shift. Inspired by human vision, and built on the foundation of neuromorphic engineering, it’s a new technology that opens up new kinds of applications, or alternative ways to address existing ones.

Download the whitepaper and learn more about it! Or fill out our form below – we’ll follow up. Or just call us at 978-474-0044.

1st Vision’s sales engineers have over 100 years of combined experience to assist in your camera and components selection. With a large portfolio of cameras, lenses, cables, NICs, and industrial computers, we can provide a full vision solution!

About you: We want to hear from you! We’ve built our brand on our know-how and like to educate the marketplace on imaging technology topics… What would you like to hear about?… Drop a line to info@1stvision.com with what topics you’d like to know more about.
