CMOS Image sensors used in machine vision industrial cameras are now the image sensor of choice! But why is this?

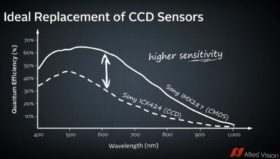

Allied Vision conducted a nice comparison between CCD and CMOS cameras, showing the advantages of the CMOS sensors in its latest Manta cameras.

Until recently, CCD was generally recommended for better image quality with the following properties:

- High pixel homogeneity, low fixed pattern noise (FPN)

- Global shutters for machine vision applications requiring very short exposure times

In the past, CMOS image sensors were chosen for these existing advantages:

- High frame rate and lower power consumption

- No blooming or smear artifacts, unlike CCD image sensors

- High Dynamic Range (HDR) modes for acquiring high-contrast and extremely bright objects

Today, CMOS image sensors offer many more advantages over CCD image sensors in industrial cameras, as detailed below.

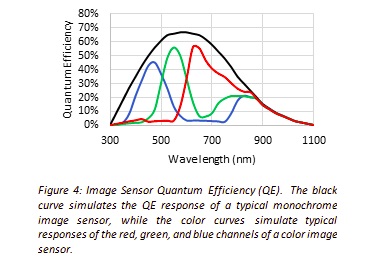

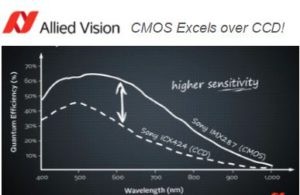

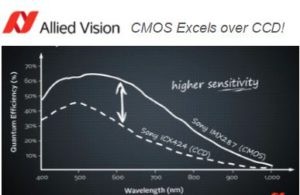

Overall, the key advantage is better image quality than earlier CMOS sensors, thanks to higher sensitivity, lower dark noise and spatial noise, and higher quantum efficiency (QE), as seen in the specifications comparing a CCD and a CMOS camera.

Sony ICX655 CCD vs a Sony IMX264 CMOS sensor

Comparing the specifications between CCD and CMOS industrial cameras, the advantages are clear.

- Higher quantum efficiency (QE): 64% vs 49%. Higher is better, since QE is the fraction of incoming photons converted to electrons.

- Pixel well depth (saturation capacity, μe.sat): 10,613 electrons (e-) vs 6,600 e-. A deeper well can store more charge before saturating.

- Dynamic range (DYN): CMOS provides almost +17 dB more dynamic range. This is partly a result of the deeper pixel well combined with low noise.

- Dark noise: CMOS is significantly lower than CCD, with only 2 electrons vs 12!
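To see how well depth and dark noise combine into dynamic range, the sketch below uses the common simplified definition: dynamic range in dB is 20·log10(full well / dark noise). This is an approximation, not the exact measurement procedure behind the datasheet DYN figure, and it uses the rounded values from the list above.

```python
import math


def dynamic_range_db(full_well_e: float, dark_noise_e: float) -> float:
    """Simplified dynamic range in dB: 20 * log10(full well / dark noise).

    Both inputs are in electrons. This ignores quantization noise and the
    exact definitions used in formal sensor characterization.
    """
    if full_well_e <= 0 or dark_noise_e <= 0:
        raise ValueError("full well and dark noise must be positive")
    return 20.0 * math.log10(full_well_e / dark_noise_e)


# Rounded values from the specification bullets (electrons).
cmos_dr = dynamic_range_db(10613, 2)   # Sony IMX264 (CMOS)
ccd_dr = dynamic_range_db(6600, 12)    # Sony ICX655 (CCD)

print(f"CMOS: {cmos_dr:.1f} dB, CCD: {ccd_dr:.1f} dB, "
      f"difference: {cmos_dr - ccd_dr:.1f} dB")
```

With these rounded inputs the estimate is about 74.5 dB for CMOS and 54.8 dB for CCD, a gap of roughly 20 dB. That is somewhat larger than the "almost +17 dB" from the specification comparison, so treat the formula as a quick estimate and rely on the measured DYN value for camera selection.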

Images are always worth a thousand words! Below are several comparison images contrasting the latest Allied Vision CMOS industrial cameras with CCD industrial cameras.

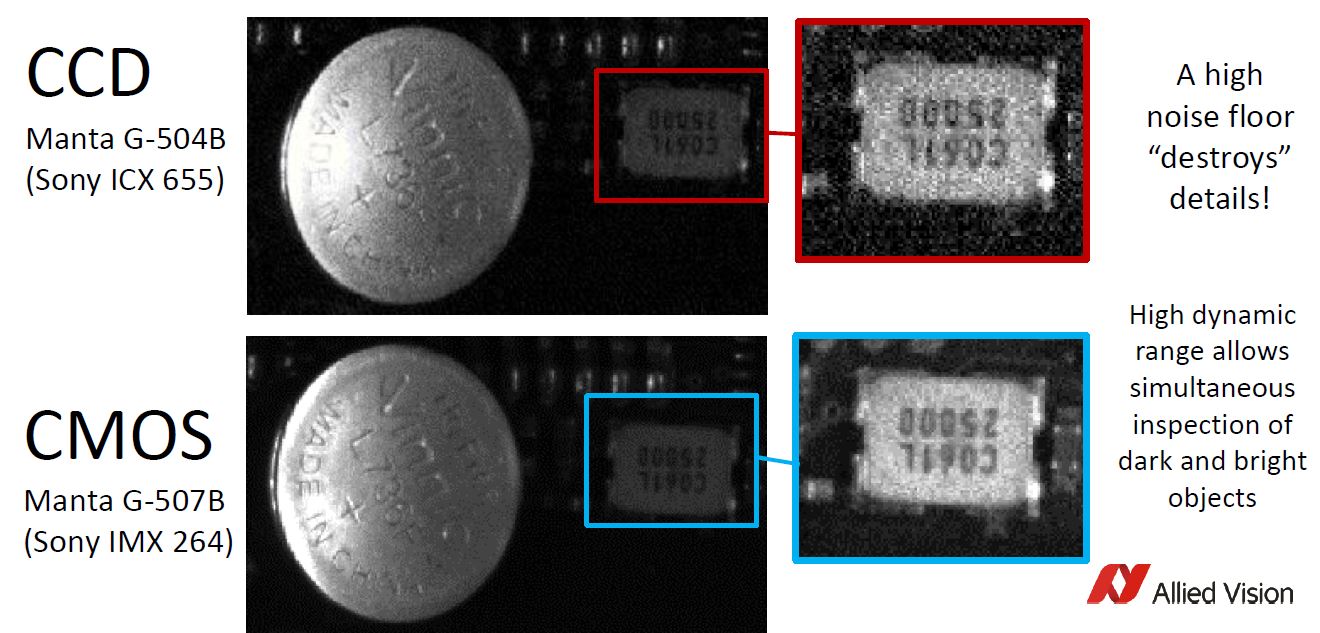

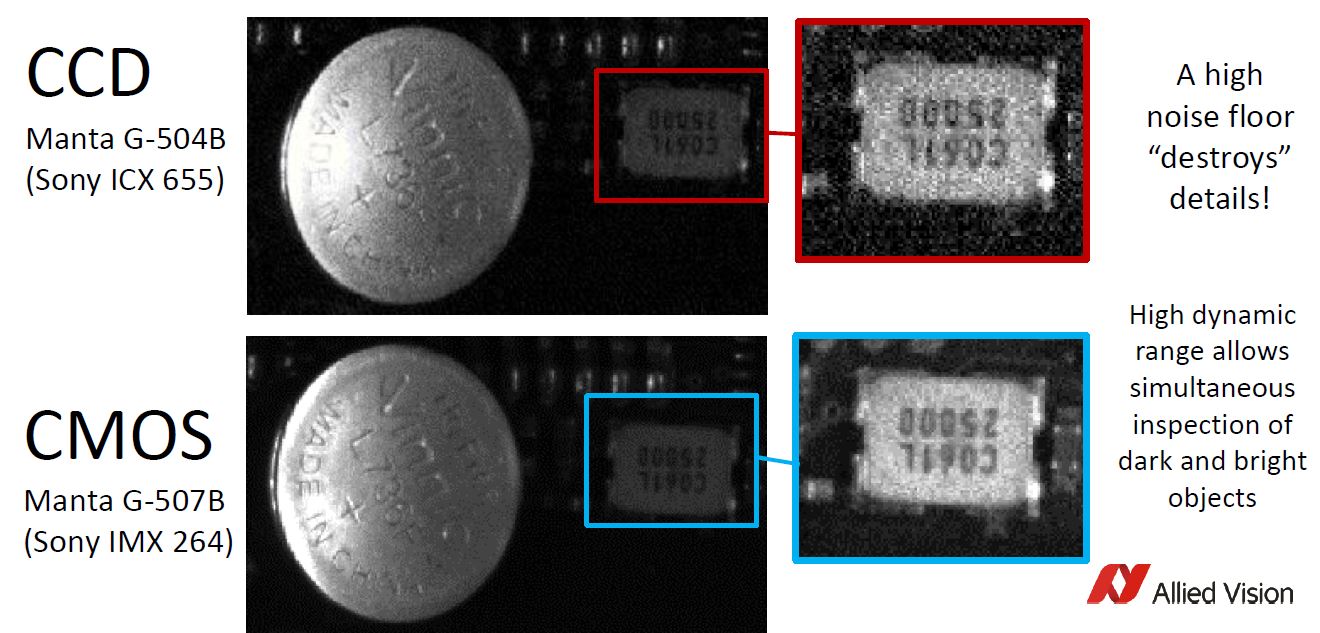

The dynamic range of today’s CMOS image sensors results from several of the characteristics above, and it provides higher-fidelity images with lower dark noise, as seen in this image comparison of a couple of electronic parts.

The comparison above illustrates how the high dynamic range and low noise of the latest CMOS industrial cameras achieve higher contrast.

- High noise in the CCD image causes low contrast between characters on the integrated circuit, whereas the CMOS sensor provides higher contrast.

- The increased dynamic range of the CMOS image allows both darker and brighter areas of the image to be seen. The battery (left) is less saturated than in the CCD image, so more detail can be observed.
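Why higher QE and lower dark noise raise contrast in dim regions, such as the IC markings, can be sketched with a simplified linear sensor model: the signal is QE times the photon count, and the noise combines photon shot noise (variance equal to the signal) with temporal dark noise. Quantization and fixed-pattern noise are left out, and the photon levels in the loop are arbitrary illustrative values, not measurements from these images.

```python
import math


def snr(photons: float, qe: float, dark_noise_e: float) -> float:
    """Signal-to-noise ratio of one pixel under a simple linear model.

    signal = QE * photons (electrons)
    noise  = sqrt(dark_noise^2 + signal)   # dark noise plus shot noise
    """
    signal_e = qe * photons
    noise_e = math.sqrt(dark_noise_e ** 2 + signal_e)
    return signal_e / noise_e


# QE and dark noise are the rounded values from the specification bullets.
for photons in (20, 50, 200):  # hypothetical photons per pixel
    cmos = snr(photons, qe=0.64, dark_noise_e=2)   # Sony IMX264
    ccd = snr(photons, qe=0.49, dark_noise_e=12)   # Sony ICX655
    print(f"{photons:>4} photons/pixel  CMOS SNR {cmos:5.2f}  CCD SNR {ccd:5.2f}")
```

At the lowest light level the CCD's 12 e- of dark noise dominates, so its SNR is several times lower than the CMOS sensor's. As the light level rises, shot noise takes over and the gap narrows, which is consistent with the biggest visible differences appearing in the darker parts of the images.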

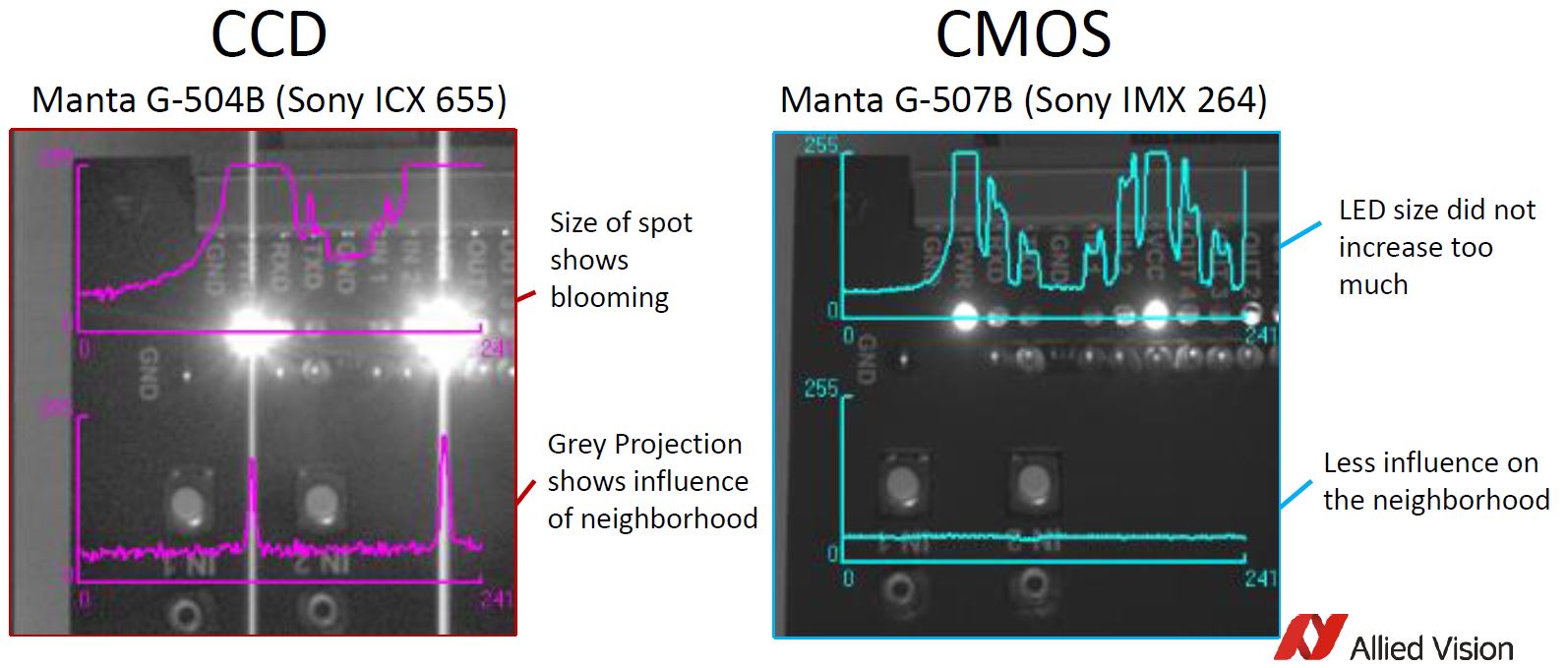

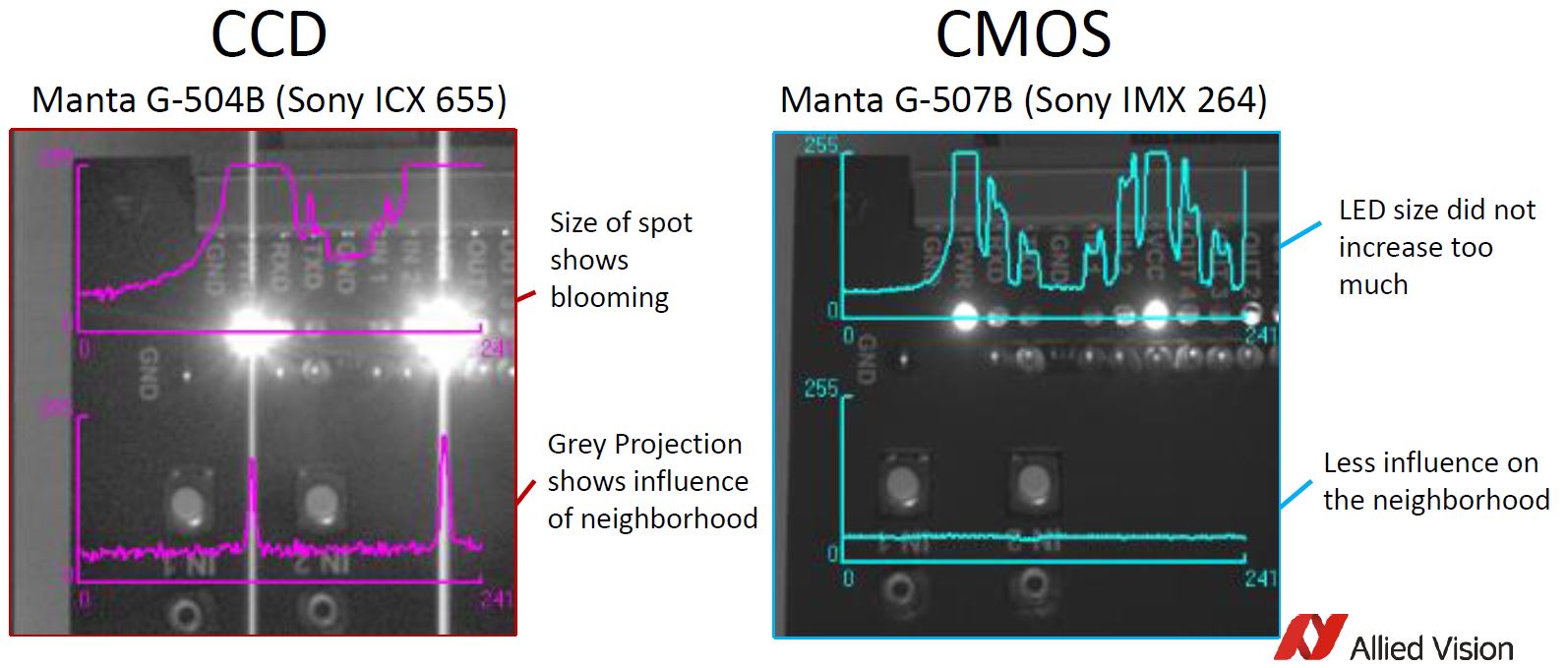

Current CMOS image sensors eliminate several artifacts and provide more useful images for processing. One example is a PCB with illuminated LEDs, imaged with both a CCD and a CMOS industrial camera.

CMOS images show less blooming of bright areas (the LEDs in the image, for example), less smearing (the vertical lines seen in the CCD image), and lower noise (visible in the darker areas, providing higher overall contrast).

- Smearing (the vertical lines seen in the CCD image) is eliminated with CMOS. Smear has always been an inherent artifact of CCDs.

- The dynamic range inherent to CMOS sensors keeps the LEDs from saturating as much as with the CCD, allowing more detail to be seen.

- Lower noise in the CMOS image, as seen in the bottom line graph, results in a cleaner image.
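Saturation of bright spots like the LEDs can be illustrated with the same rounded specifications: a pixel clips once its well is full, so the photon count needed to saturate is roughly full well divided by QE. This is a minimal sketch that assumes identical optics, exposure time, and gain for both cameras; the LED photon count is a hypothetical value, not a measurement from these images.

```python
def saturation_photons(full_well_e: float, qe: float) -> float:
    """Photons per pixel needed to fill the well completely."""
    return full_well_e / qe


def pixel_electrons(photons: float, qe: float, full_well_e: float) -> float:
    """Electrons collected by one pixel, clipped at the full-well capacity."""
    return min(qe * photons, full_well_e)


# Rounded values from the specification bullets.
CMOS = {"qe": 0.64, "full_well_e": 10613}  # Sony IMX264
CCD = {"qe": 0.49, "full_well_e": 6600}    # Sony ICX655

led_photons = 15000  # hypothetical photons on a pixel imaging the LED

for name, s in (("CMOS", CMOS), ("CCD", CCD)):
    sat = saturation_photons(s["full_well_e"], s["qe"])
    got = pixel_electrons(led_photons, s["qe"], s["full_well_e"])
    clipped = got >= s["full_well_e"]
    print(f"{name}: saturates at {sat:,.0f} photons; LED pixel {got:,.0f} e- "
          f"({'clipped' if clipped else 'not clipped'})")
```

Under these assumptions the CCD pixel fills at about 13,500 photons and the CMOS pixel at about 16,600, so the CMOS sensor tolerates roughly 23% more light before clipping. In practice, its lower dark noise also leaves room to shorten the exposure and keep bright features further below saturation.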

More advantages of new CMOS image sensors include:

- Higher frame rates and faster shutter speeds than CCD, resulting in less blur on fast-moving objects.

- The much lower cost of CMOS sensors translates into much lower-cost cameras!

- Improved global shutter efficiency.
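The blur benefit of short exposures can be estimated with a simple relationship: blur in pixels equals the distance the object travels during the exposure divided by the size of one pixel projected onto the object. The conveyor speed, field of view, and resolution below are hypothetical values chosen only for illustration.

```python
def motion_blur_pixels(speed_mm_s: float, exposure_s: float,
                       fov_width_mm: float, pixels_across: int) -> float:
    """Blur, in pixels, of an object moving across the field of view."""
    mm_per_pixel = fov_width_mm / pixels_across  # object-space pixel size
    travel_mm = speed_mm_s * exposure_s          # distance moved while exposing
    return travel_mm / mm_per_pixel


def max_exposure_s(speed_mm_s: float, fov_width_mm: float,
                   pixels_across: int, max_blur_px: float = 1.0) -> float:
    """Longest exposure that keeps blur at or below max_blur_px."""
    mm_per_pixel = fov_width_mm / pixels_across
    return max_blur_px * mm_per_pixel / speed_mm_s


# Hypothetical setup: 500 mm/s conveyor, 100 mm field of view, 1000 pixels across.
print(motion_blur_pixels(500, 0.001, 100, 1000))  # blur with a 1 ms exposure
print(max_exposure_s(500, 100, 1000))             # exposure for <= 1 px blur
```

In this setup a 1 ms exposure smears the object across about 5 pixels, while holding blur to 1 pixel needs an exposure of roughly 200 µs. Blur within a single frame depends on exposure time; a higher frame rate helps separately by sampling a fast process more often.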

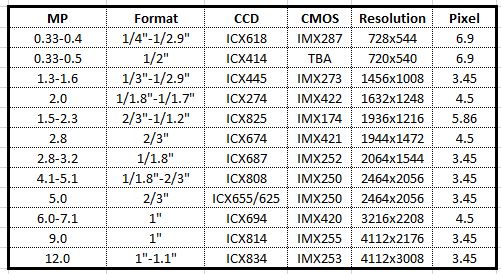

CMOS image sensor manufacturers are also designing sensors that can easily replace CCD sensors, making for a smooth transition that results in lower cost and better performance. Allied Vision has several new cameras replacing current CCD models, with more to come! Below are a few popular cameras and image sensors that have recently been crossed over to CMOS image sensors.

The Sony ICX424 and Sony ICX445 (1/3″ sensors), found in the Manta G-032 and Manta G-125 cameras, are now replaced by the Sony IMX273 in the Manta G-158 camera, keeping the same sensor size.

The Sony ICX424 (1/3″ sensor) can also be replaced by the Sony IMX287 (1/2.9″ sensor), whose 6.9 µm pixels closely match the 7.4 µm pixels of the older ICX424. The Allied Vision Manta G-040 is a nice solution with all the benefits of the latest CMOS image sensor technology.
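Whether a CMOS sensor is a practical drop-in for a CCD depends partly on its active area, which is the pixel count times the pixel pitch. The ICX424 and IMX287 pixel sizes come from the text above; the effective resolutions, and the ICX445 and IMX273 pixel sizes, are assumptions based on Sony's published specifications and should be confirmed against the datasheets.

```python
import math


def active_area_mm(h_pixels: int, v_pixels: int, pixel_um: float):
    """Return (width, height, diagonal) of the active area in millimetres."""
    width = h_pixels * pixel_um / 1000.0
    height = v_pixels * pixel_um / 1000.0
    return width, height, math.hypot(width, height)


# (effective width px, effective height px, pixel size um); resolutions assumed.
sensors = {
    "ICX424 (CCD)": (640, 480, 7.4),
    "ICX445 (CCD)": (1280, 960, 3.75),
    "IMX287 (CMOS)": (720, 540, 6.9),
    "IMX273 (CMOS)": (1440, 1080, 3.45),
}

for name, (h, v, px) in sensors.items():
    w, hgt, d = active_area_mm(h, v, px)
    print(f"{name:14s} {w:.2f} x {hgt:.2f} mm, diagonal {d:.2f} mm")
```

Under these assumptions both CMOS parts come out at about 4.97 x 3.73 mm (roughly 6.2 mm diagonal), against roughly 5.9 to 6.0 mm for the two CCDs, so the optical format is nearly unchanged. It is still worth checking that the existing lens's image circle covers the slightly larger diagonal.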

1st Vision’s sales engineers have over 100 years of combined experience to assist in your camera selection. With a large portfolio of lenses, cables, NIC cards and industrial computers, we can provide a full vision solution!
