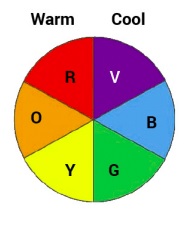

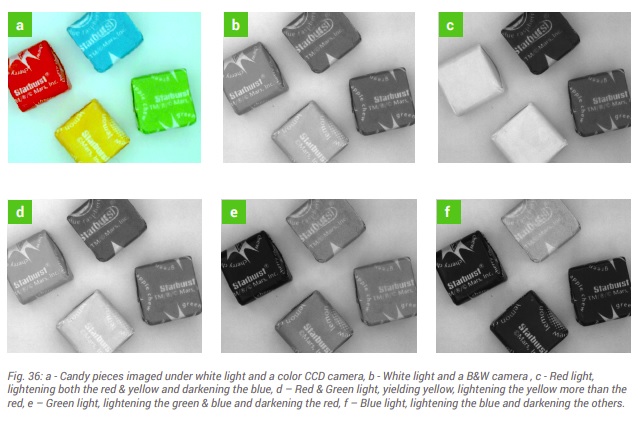

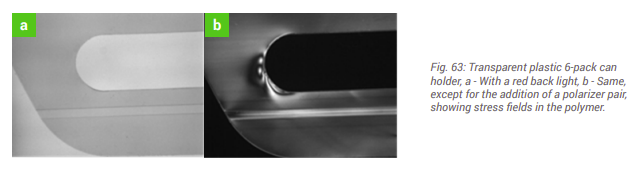

Seasoned machine vision practitioners know that while the sensor and the optics are important, so too is lighting design. Unless an application is so "easy" that ambient light suffices, many if not most value-added applications require supplemental light. "White" light is what comes to mind first, since it's what we humans experience most. But narrow-band light, whether colored light within the visible spectrum or non-visible light paired with a sensor sensitive to those wavelengths, is sometimes the key to maximizing contrast.

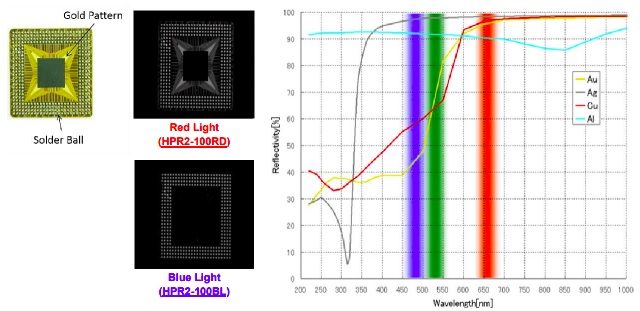

In the illustrations above, suppose we have an application that must identify gold contacts. The ideal contrast makes the gold features "pop" while everything that isn't gold disappears entirely, or at most appears very faintly. If the targets that will come into the field of view have known properties, one can often design the lighting to achieve exactly such an optimal outcome.
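To compare lighting options objectively, it helps to put a number on how much a feature "pops." One common choice (our assumption here, not a method prescribed by the images above) is the Michelson contrast between the mean gray level of the feature region and that of the background. The sketch below assumes you have already extracted the pixel values of each region; `michelson_contrast` is a hypothetical helper name.

```python
from statistics import mean


def michelson_contrast(feature_pixels, background_pixels):
    """Michelson contrast between two regions of a grayscale image.

    Returns a value in [0, 1]: 0 means both regions have the same mean
    brightness; values near 1 mean one region is nearly black while the
    other is bright.
    """
    i_feature = mean(feature_pixels)
    i_background = mean(background_pixels)
    total = i_feature + i_background
    if total == 0:
        return 0.0  # both regions fully dark: no measurable contrast
    return abs(i_feature - i_background) / total


# Example: gold-contact pixels vs. surrounding board pixels under one light.
print(michelson_contrast([200, 210, 205], [40, 35, 45]))
```

Capturing the same scene under white, red, and blue light and computing this value for each gives a simple way to rank the candidate wavelengths for the feature you care about.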

In this example, consider the white-light image at top left, and then the over-under images taken under red and blue light respectively. The white-light image shows "everything" but doesn't really isolate the gold components. The red light does a great job of showing just the gold (Au). The blue light emphasizes silver (Ag). The graph to the right shows how four common metals respond to different visible wavelengths. Good to know!
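If you have reflectance-versus-wavelength data like the graph described above, choosing a light can be framed as a small search: pick the wavelength at which the target material out-reflects every other material in the scene by the widest margin. The sketch below is a first-order illustration under stated assumptions: reflectance values come from your own data (none are supplied here), `best_wavelength` is a hypothetical helper, and it ignores the light source's spectrum and the sensor's spectral response, both of which also affect the final image.

```python
def best_wavelength(reflectance, target):
    """Pick the wavelength where `target` is brightest relative to all other materials.

    reflectance: {material: {wavelength_nm: reflectance_0_to_1}}; every
    material must list the same wavelengths.
    Returns (wavelength_nm, margin). A negative margin means some other
    material reflects more than the target at every listed wavelength.
    """
    others = [m for m in reflectance if m != target]
    if not others:
        raise ValueError("need at least one non-target material to compare against")
    best = None
    for wavelength, r_target in reflectance[target].items():
        # Worst case at this wavelength: the competing material closest to
        # (or brighter than) the target.
        margin = min(r_target - reflectance[m][wavelength] for m in others)
        if best is None or margin > best[1]:
            best = (wavelength, margin)
    return best
```

For the opposite goal, making a material disappear, negate the margin so the search favors wavelengths where that material is darkest relative to the rest.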

For an illustrated nine-page treatment of how various wavelengths improve contrast for specific materials or applications, download this Wavelength Guide from our Knowledge Base. You may be able to identify the ideal wavelength for your application yourself. Or, if you prefer, just call us at 978-474-0044 and we can guide you to a solution.

To the left we see five plastic contact lens packages under white light. Presence/absence detection is inconclusive. Image courtesy of CCS America.

Under UV light, a presence/absence quality control check can be programmed with a simple rule: presence is declared when 30% or more of the area within each round region renders as black. Image courtesy of CCS America.
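The 30% rule above translates directly into code. This is a minimal sketch, assuming a grayscale image stored as a list of rows, one circular region of interest per package given as `(center_x, center_y, radius)` in pixels, and a "black" cutoff of 50 on a 0 to 255 scale. The cutoff, the region format, and the function names are all hypothetical choices for illustration; only the 30% threshold comes from the example.

```python
def dark_fraction(image, cx, cy, radius, black_level):
    """Fraction of pixels inside a circle whose gray value is at or below black_level."""
    total = dark = 0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                total += 1
                if value <= black_level:
                    dark += 1
    return dark / total if total else 0.0  # empty region counts as "not present"


def check_presence(image, regions, black_level=50, min_dark_fraction=0.30):
    """Return one bool per region: True if at least 30% of it renders black.

    black_level=50 is an assumed cutoff; tune it to your UV images.
    """
    return [
        dark_fraction(image, cx, cy, r, black_level) >= min_dark_fraction
        for cx, cy, r in regions
    ]
```

In practice you would locate the regions once (the package layout is fixed) and then apply `check_presence` to every frame; a production system would use an image-processing library rather than pure-Python loops, but the decision rule is the same.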

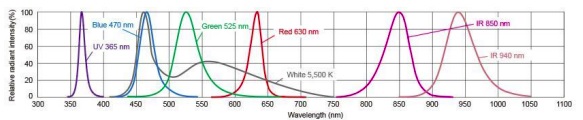

It all comes down to how specific materials reflect or absorb certain wavelengths. Below is a chart showing the peaks of some of the wavelengths most commonly used in machine vision.

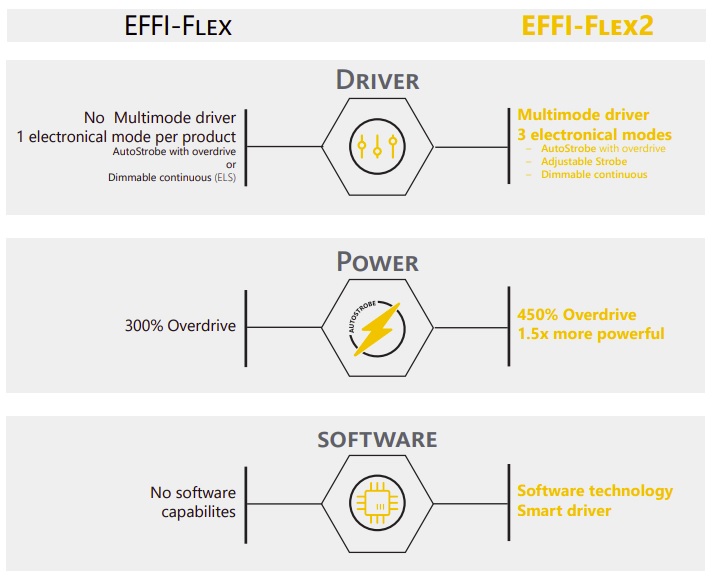

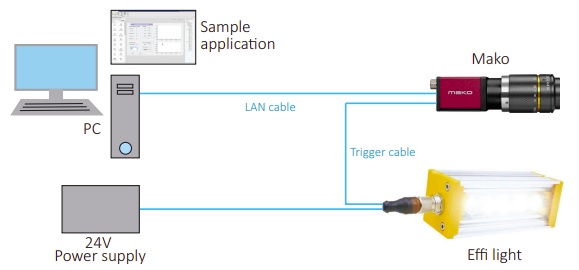

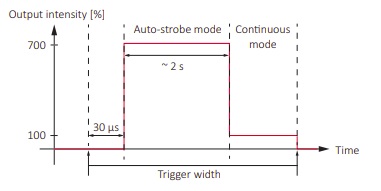

For more details on enhancing contrast via lighting at specific wavelengths, download this Wavelength Guide from our Knowledge Base. Or click Contact Us so we can discuss your application and guide you. 1stVision has three partners offering different lighting geometries and wavelengths to create contrast, all in the same business group. CCS America and Effilux offer a variety of wavelengths (UV through NIR) and light formats (ring light, back light, bar light, dome). Gardasoft offers a full range of lighting controllers. Tell us about your application and we'll help you design an optimal solution.

1stVision's sales engineers have over 100 years of combined experience to assist in your camera and component selection. With a large portfolio of lenses, cables, NIC cards, and industrial computers, we can provide a full vision solution!