This is not some blue-sky puff piece about how AI may one day be better / faster / cheaper at doing almost anything, at least in certain domains of expertise. This is about how AI is already better / faster / cheaper at doing certain things in the field of machine vision – today.

Conventional machine vision

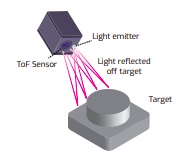

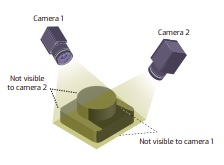

There are classical machine vision tools and methods, like edge detection, for which AI has nothing new to add. If the edge detection algorithm is working fine as programmed in your vision software, who needs AI? If it ain’t broke, don’t fix it. Presence / absence detection, 3D height calculation, and many other imaging techniques work just fine without AI. Fair enough.
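To make "working fine as programmed" concrete, here is a minimal sketch of one classical approach, a Sobel-style edge detector, written in plain Python on a tiny synthetic image. The function names, the threshold of 100, and the test image are assumptions made up for illustration; a real vision package would use its own optimized implementation.

```python
# Minimal Sobel edge detector on a grayscale image stored as a list of rows.
# Pure standard-library Python for illustration only; names and the default
# threshold are hypothetical, not taken from any particular vision SDK.
import math

SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def sobel_magnitude(image):
    """Return the gradient magnitude for each interior pixel (borders stay 0)."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    pixel = image[y + dy][x + dx]
                    gx += SOBEL_X[dy + 1][dx + 1] * pixel
                    gy += SOBEL_Y[dy + 1][dx + 1] * pixel
            out[y][x] = math.hypot(gx, gy)
    return out


def edge_mask(image, threshold=100.0):
    """Mark pixels whose gradient magnitude exceeds a hand-picked threshold."""
    return [[1 if m > threshold else 0 for m in row]
            for row in sobel_magnitude(image)]


# Synthetic 5x6 test image: dark left half (0), bright right half (255).
IMAGE = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
MASK = edge_mask(IMAGE)
```

The rule is fully explicit: a fixed kernel plus a fixed threshold. When the edge is crisp, as in this synthetic image, nothing needs to be learned, which is the "if it ain’t broke" case.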

From image processing to image recognition

As any branch of human activity evolves, the fundamental building blocks serve as foundations for higher-order operations that bring more value. Civil engineers build bridges, confident that the underlying physics and materials science let them choose among arch, suspension, cantilever, or cable-stayed designs.

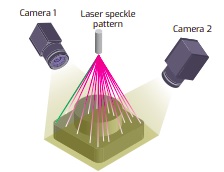

So too with machine vision. As the field matures, value-added applications can be created by moving up a level of abstraction. The low-level tools still include edge detection, for example, but we’d like to create application-level capabilities that solve problems without us having to tediously program our way up from the feature-detection level.

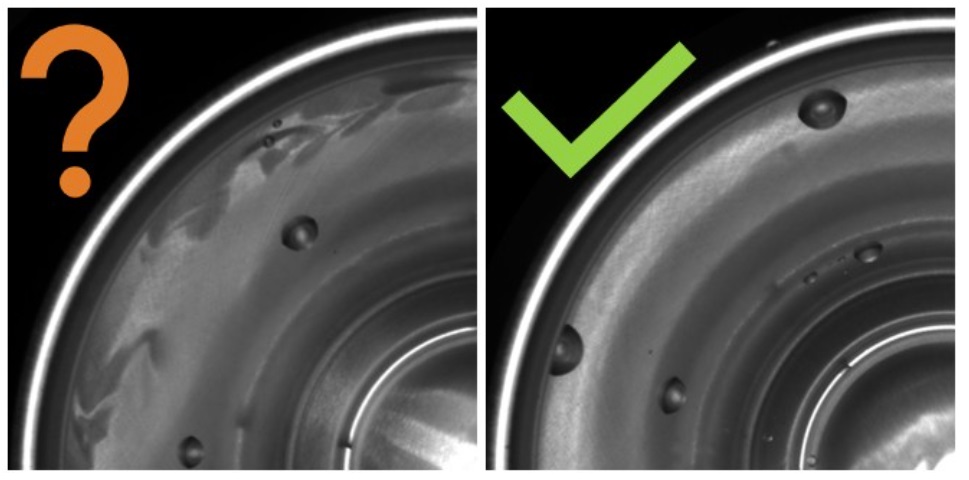

| Traditional Machine Vision Tools | AI Classification Algorithm |
| --- | --- |
| Can’t discern surface damage vs. water droplets | Ignores water droplets |
| Are challenged by shading and perspective changes | Invariant to surface changes and perspective |

Briefly in the human cognition realm

Let’s tee this up with a scenario from human image recognition. Suppose you are driving your car along a quiet residential street. Up ahead you see a child run from a yard, across the sidewalk, and into the street.

It may well be that the rods and cones in your retina, your visual cortex, and the rest of your brain used edge detection to process contrasting image segments, arriving first at “biped mammal”, then at “child”, and on to evaluating risk and hitting the brakes. But that isn’t how we usually talk about defensive driving. We just think in terms of accident avoidance, situational awareness, and braking/swerving – at a very high level.

Applications that behave intelligently

That’s how we increasingly would like our imaging applications to behave – intelligently and at a high level. We’re not claiming it’s “human equivalent” intelligence, or that the AI method is the same as the human method. All we’re saying is that AI, when well-managed and tested, has become a branch of engineering that can deliver effective results.

So as autonomous vehicles come to market, of course we want to be sure that sufficient testing and certification are completed, as a matter of safety. But whether the safe-driving outcome is based on “AI” or “vision engineering”, or the melding of the two, what matters is the continuous sequence of system outputs like: “reduce following distance”, “swerve left 30 degrees”, and “brake hard”.

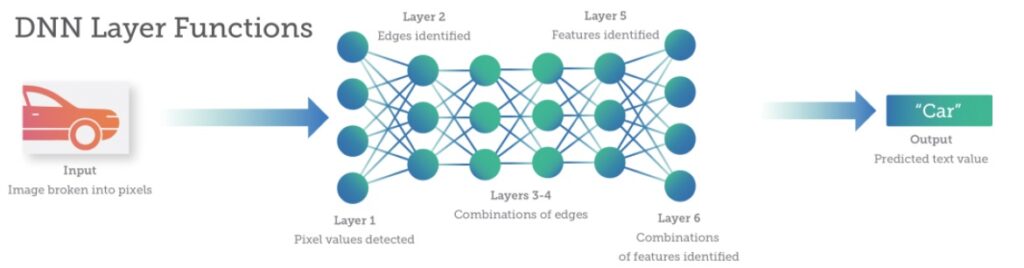

Neural Networks

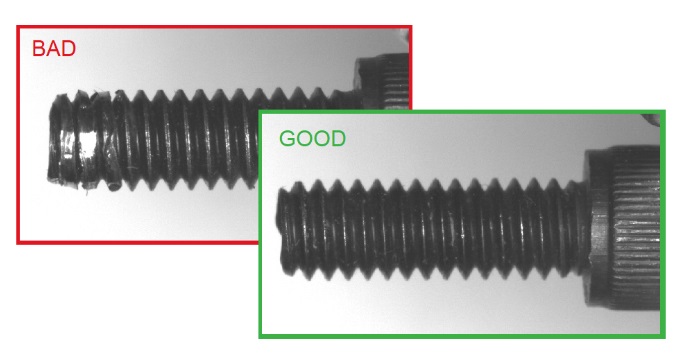

One branch of AI, neural networks, has proven effective in many “recognition” and categorization applications. Is the thing being imaged an example of what we’re looking for, or can it be dismissed? If it is the sort of thing we’re looking for, is it of sub-type x, y, or z? “Good” item – retain. “Bad” item – reject. You get the idea.
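As a rough sketch of that last step, the snippet below turns a network's raw output scores into a retain / reject decision. The scores are made-up stand-ins for what a trained network might output, and the names `LABELS`, `ACTIONS`, and `decide` are hypothetical; this is not the API of any particular product.

```python
# Turning raw class scores into a retain / reject decision.
# The scores are hypothetical stand-ins for a trained network's outputs.
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, labels):
    """Return the most probable label and its probability."""
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]


LABELS = ["good", "bad"]
ACTIONS = {"good": "retain", "bad": "reject"}


def decide(scores):
    """Map network scores for one item to an action plus a confidence."""
    label, confidence = classify(scores, LABELS)
    return ACTIONS[label], confidence


# Example: a score vector favouring "good", and one favouring "bad".
ACTION_A, CONF_A = decide([2.0, 0.0])
ACTION_B, CONF_B = decide([-1.0, 3.0])
```

Sub-types x, y, and z would work the same way: extend `LABELS` and map each label to whatever action the line needs.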

From training to inference

With neural networks, instead of programming algorithms at a granular feature analysis level, one trains the network. Training may include showing “good” vs. “bad” images – without having to articulate what makes them good or bad – and letting the network infer the essential characteristics. In fact it’s sometimes possible to train only with “good” examples – in which case anomaly detection flags production images that deviate from the trained pool of good ones.
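The "good examples only" idea can be sketched without a neural network at all. The toy below swaps in a much simpler technique, per-feature mean and standard deviation with a z-score limit, purely to show the shape of the workflow: fit on good samples, then flag anything that deviates. The feature vectors (mean brightness, edge density), the limit of 3.0, and all function names are assumptions for illustration; a deep-learning tool learns far richer characteristics directly from the images.

```python
# Toy anomaly detection trained on "good" samples only.
# This is a simple statistical stand-in (z-scores), NOT a neural network.
import statistics


def fit_good_profile(good_samples):
    """Learn per-feature mean and standard deviation from good samples only."""
    columns = list(zip(*good_samples))
    means = [statistics.mean(c) for c in columns]
    # Guard against a zero spread so scoring never divides by zero.
    stdevs = [max(statistics.stdev(c), 1e-9) for c in columns]
    return means, stdevs


def anomaly_score(sample, profile):
    """Largest absolute z-score across the sample's features."""
    means, stdevs = profile
    return max(abs(v - m) / s for v, m, s in zip(sample, means, stdevs))


def is_anomaly(sample, profile, limit=3.0):
    """Flag a sample that deviates from the good pool by more than `limit`."""
    return anomaly_score(sample, profile) > limit


# Hypothetical (mean brightness, edge density) features for known-good parts.
GOOD = [(100.0, 0.20), (102.0, 0.22), (98.0, 0.18), (101.0, 0.21), (99.0, 0.19)]
PROFILE = fit_good_profile(GOOD)

TYPICAL_FLAGGED = is_anomaly((100.5, 0.205), PROFILE)  # close to the good pool
ODD_FLAGGED = is_anomaly((100.0, 0.35), PROFILE)       # unusual edge density
```

Note that nobody had to describe what a defect looks like. The second sample is flagged only because it sits far outside what the good pool looked like.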

Enough theory – what products actually do this?

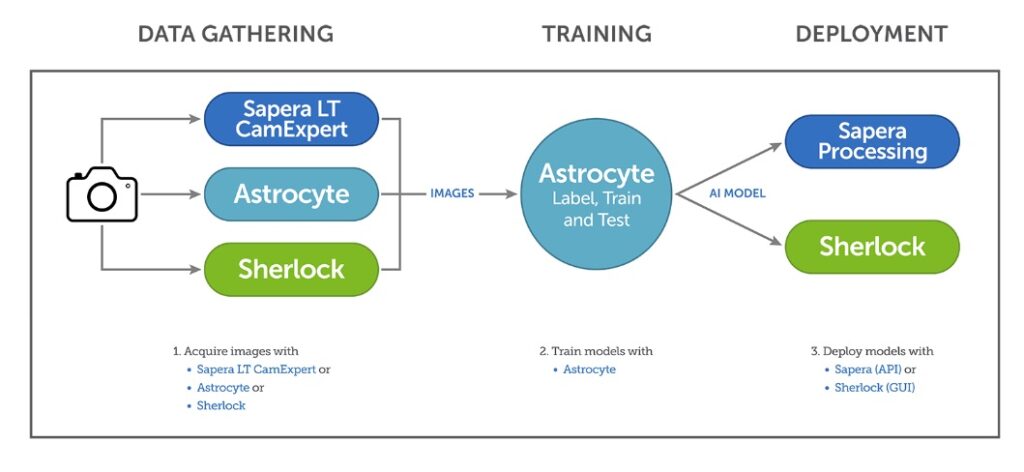

Teledyne DALSA Astrocyte software creates a deep neural network to perform a desired task. More accurately – Astrocyte provides a graphical user interface (GUI) and a neural network framework, such that an application-specific neural network can be developed by training it on sample images. With a suitable collection of images, Teledyne DALSA Astrocyte can create an effective AI model in under 10 minutes!

Mix and match tools

In the diagram above, we show an “all DALSA” tools view, for those who may already have expertise in either the Sapera or Sherlock SDKs. But one can mix and match. Images may alternatively be acquired with third-party tools – paid or open source. And one may not need rules-based processing beyond the neural network. Astrocyte builds the neural network at the heart of the application.

User-friendly AI

The key value proposition with Teledyne DALSA Astrocyte is that it’s user-friendly AI. The GUI used to configure the training and to validate the model requires no programming. And one doesn’t need special training in AI. Sure, it’s worth reading about the deep learning architectures supported. They include: Classification, Anomaly Detection, Object Detection, and Segmentation. And you’ll want to understand how the training and validation work. It’s powerful – it’s built by Teledyne DALSA’s software engineers standing on the shoulders of neural network researchers – but you don’t have to be a rocket scientist to add value in your field of work.

1st Vision’s sales engineers have over 100 years of combined experience to assist in your camera and components selection. With a large portfolio of cameras, lenses, cables, network interface cards and industrial computers, we can provide a full vision solution! We’re big enough to carry the best cameras, and small enough to care about every image.

About you: We want to hear from you! We’ve built our brand on our know-how and like to educate the marketplace on imaging technology topics. What would you like to hear about? Drop a line to info@1stvision.com with the topics you’d like to know more about.